Load balancers are an inextricable part of working in a cloud environment. Whenever high availability is needed, you will likely need a load balancer to spread traffic evenly across your servers. But what is a load balancer, how does it work, and which one should you choose?

This article examines just that, specifically focusing on AWS load balancer types and what they offer.

What Is Load Balancing, And How Does It Work?

According to AWS, load balancing is a method of distributing “network traffic equally across a pool of resources that support an application.” Many applications must process millions of users simultaneously, returning the correct results for each request without sacrificing speed or reliability.

Handling that traffic volume requires the apps to run on many resource servers that hold duplicate data.

The load balancer sits between the server network and the user, quietly facilitating the equal use of all resource servers. This dramatically improves the app’s performance, security, scalability, and availability.

How It Works

A load balancer monitors the health of the backend resources and avoids sending requests to servers that cannot handle them. It can be:

- A software process, a virtual instance running on dedicated hardware, or a physical device.

- Configured with one of many load balancing algorithms to distribute traffic according to your requirements.

- Integrated with ADCs (Application Delivery Controllers) designed to boost performance and security in microservices-based and three-tier web apps, no matter where they’re hosted.
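
The health monitoring described at the start of this section can be sketched in a few lines. This is a minimal illustration, not how any particular load balancer implements it; the `Backend` class, the function names, and the server names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    healthy: bool = True  # result of the most recent health check


def record_health_check(backend: Backend, check_passed: bool) -> None:
    """Store the outcome of the latest health probe for a backend."""
    backend.healthy = check_passed


def eligible_backends(pool: list[Backend]) -> list[Backend]:
    """Return only the backends that passed their last health check."""
    return [b for b in pool if b.healthy]


pool = [Backend("app-1"), Backend("app-2"), Backend("app-3")]
record_health_check(pool[1], check_passed=False)  # app-2 fails its probe

print([b.name for b in eligible_backends(pool)])  # ['app-1', 'app-3']
```

Requests are then distributed only among the backends returned by `eligible_backends`, so a failed server stops receiving traffic until a later check passes.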

AWS compares the load balancer to a restaurant manager overseeing the waitstaff. If customers chose who served them, some waiters would be overwhelmed while others sat idle. The load balancer is like the manager, who assigns customers to specific waitstaff to avoid the imbalance.

The scalability that load balancing offers allows organizations and businesses to handle many requests from modern multi-device workflows and apps efficiently.

Load Balancing Algorithms

A load balancing algorithm is the set of rules a load balancer uses to determine the best server for each client request. There are two main categories of load-balancing algorithms:

Static Load Balancing

Static algorithms follow fixed rules that are independent of the current server state. Examples include:

- Round-robin method – Instead of specialized hardware or a software process, the round-robin technique uses an authoritative name server to do load balancing. The name server returns the IP addresses of the servers in the server farm in turn.

- Weighted round-robin method – You can assign a different weight to each server depending on its priority or capacity. The name server forwards more inbound application traffic to higher-weight servers.

- IP hash method – The load balancer performs a mathematical calculation, known as hashing, on the client IP address. The hash converts the address into a number, which is then mapped to an individual server.
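
The three static methods above can be sketched as follows. This is a simplified in-process illustration rather than a name server implementation; the server addresses, the weights, and the choice of SHA-256 as the hash function are assumptions made for the example.

```python
import hashlib
from itertools import cycle

SERVERS = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]  # hypothetical addresses


def round_robin(servers):
    """Return an iterator that hands out servers in a fixed repeating order."""
    return cycle(servers)


def weighted_round_robin(weights):
    """Return an iterator in which each server appears `weight` times per cycle."""
    expanded = [server for server, weight in weights.items() for _ in range(weight)]
    return cycle(expanded)


def ip_hash(client_ip, servers):
    """Map a client IP to one server using a stable hash of the address."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


rr = round_robin(SERVERS)
print([next(rr) for _ in range(4)])
# ['10.0.0.1', '10.0.0.2', '10.0.0.3', '10.0.0.1']

wrr = weighted_round_robin({"10.0.0.1": 2, "10.0.0.2": 1})
print([next(wrr) for _ in range(3)])
# ['10.0.0.1', '10.0.0.1', '10.0.0.2']

# The same client address always maps to the same server.
print(ip_hash("203.0.113.7", SERVERS) == ip_hash("203.0.113.7", SERVERS))  # True
```

None of these functions looks at server load, which is what makes them static: the choice depends only on the request order, the configured weights, or the client address.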

Dynamic Load Balancing

In dynamic load balancing, the algorithms look at the current state of the servers before traffic distribution. Some methods include:

- Least response time method – To find the optimal server, the least response time approach combines the server response time and the active connections to route traffic.

- Least connection method – The load balancer uses the least connection approach to determine which servers have the fewest active connections and routes traffic to them. The system assumes all connections need the same amount of processing power from all servers.

- Weighted least connection method – This method assumes that some servers can manage more active connections than others. You can therefore assign a different weight or capacity to each server, and the load balancer sends incoming client requests to the server with the fewest connections relative to its capacity.

- Resource-based method – Load balancers distribute traffic in a resource-based way by monitoring the current server load. Every server has specialized software known as an agent, which calculates the consumption of server resources such as compute capacity and memory. The load balancer then queries the agent for available resources before directing traffic to that server.
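
The first three dynamic methods can be sketched as selection functions over the current server state. The `Server` fields and the sample numbers are hypothetical, and the formula in `least_response_time` is only one possible way to combine response time with active connections.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    active_connections: int
    capacity: int = 1             # relative weight (assumed unit)
    avg_response_ms: float = 0.0  # recent average response time


def least_connection(servers):
    """Pick the server with the fewest active connections."""
    return min(servers, key=lambda s: s.active_connections)


def weighted_least_connection(servers):
    """Pick the server with the fewest connections relative to its capacity."""
    return min(servers, key=lambda s: s.active_connections / s.capacity)


def least_response_time(servers):
    """Combine response time and active connections (one possible scoring)."""
    return min(servers, key=lambda s: (s.active_connections + 1) * s.avg_response_ms)


servers = [
    Server("a", active_connections=10, capacity=4, avg_response_ms=20.0),
    Server("b", active_connections=6, capacity=1, avg_response_ms=50.0),
    Server("c", active_connections=8, capacity=2, avg_response_ms=15.0),
]

print(least_connection(servers).name)           # b
print(weighted_least_connection(servers).name)  # a
print(least_response_time(servers).name)        # c
```

With the same snapshot of server state, each method picks a different server, which shows why the choice of algorithm matters when servers differ in capacity or speed.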

AWS Load Balancer Types

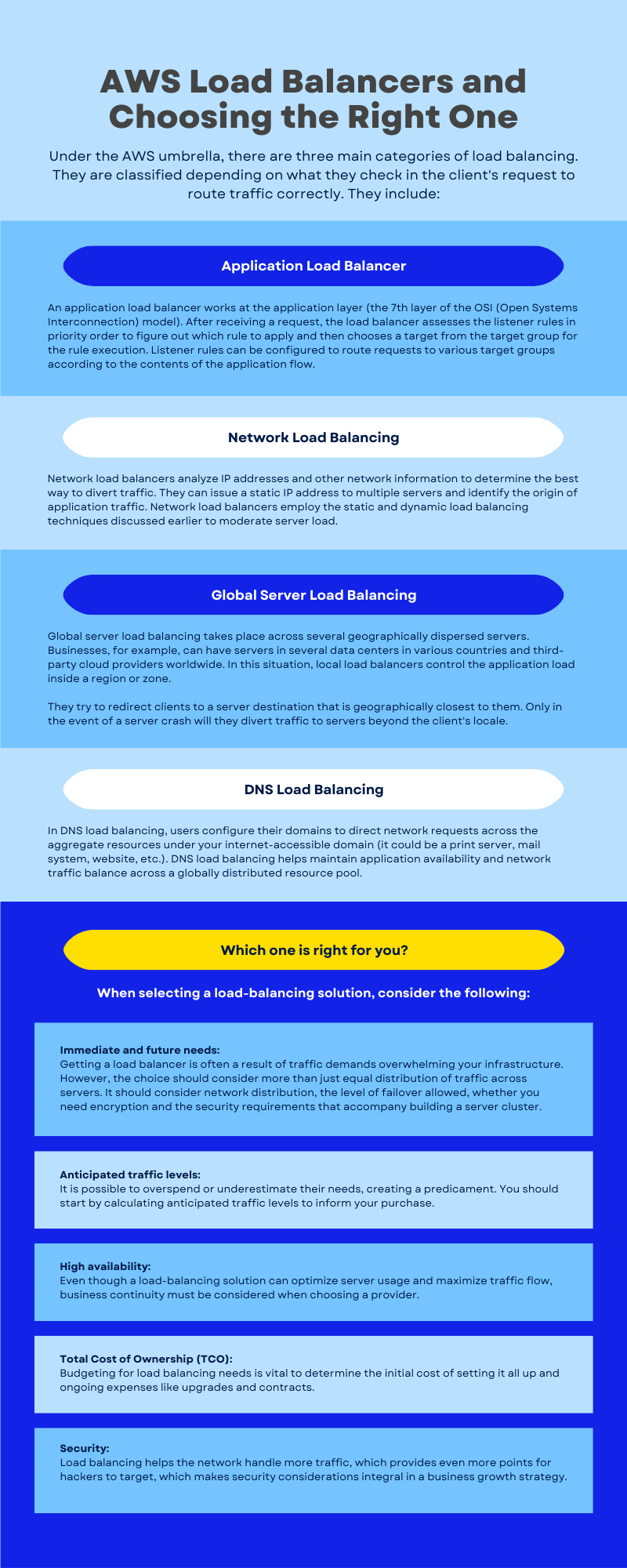

Under the AWS umbrella, there are four main categories of load balancing. They are classified according to what the load balancer checks in the client’s request to route traffic correctly. They include:

Application Load Balancing

An application load balancer works at the application layer, the seventh layer of the OSI (Open Systems Interconnection) model. After receiving a request, the load balancer evaluates the listener rules in priority order to determine which rule to apply, then selects a target from the target group for that rule’s action.

Listener rules can be configured to route requests to different target groups based on the content of the application traffic.

Even when a target is registered with multiple target groups, routing is performed independently for each target group. The routing algorithm can be configured at the target group level. Round robin is the default; alternatively, you can choose the least outstanding requests routing algorithm.
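
The rule evaluation described above can be modeled with a small simulation. This is not AWS code and does not call any AWS API; the `Rule` and `Listener` classes, the path-prefix matching, and the target names are assumptions chosen to illustrate priority-ordered rules with per-target-group round robin.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Rule:
    priority: int      # lower numbers are evaluated first
    path_prefix: str   # simplified stand-in for a path condition
    target_group: str


class Listener:
    def __init__(self, rules, default_target_group, target_groups):
        self.rules = sorted(rules, key=lambda r: r.priority)
        self.default_target_group = default_target_group
        # Each target group keeps its own independent round-robin iterator.
        self._iters = {name: cycle(targets) for name, targets in target_groups.items()}

    def route(self, path):
        """Return (target group, target) for a request path."""
        group = self.default_target_group
        for rule in self.rules:
            if path.startswith(rule.path_prefix):
                group = rule.target_group
                break  # the first matching rule wins
        return group, next(self._iters[group])


listener = Listener(
    rules=[Rule(20, "/api", "api"), Rule(10, "/api/admin", "admin")],
    default_target_group="web",
    target_groups={"web": ["web-1", "web-2"], "api": ["api-1"], "admin": ["admin-1"]},
)

print(listener.route("/api/admin/users"))  # ('admin', 'admin-1')
print(listener.route("/api/orders"))       # ('api', 'api-1')
print(listener.route("/index.html"))       # ('web', 'web-1')
print(listener.route("/about"))            # ('web', 'web-2')
```

The `/api/admin/users` request matches both rules, but the priority 10 rule is evaluated first, so it reaches the `admin` group. Requests that match no rule fall through to the default target group.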

Network Load Balancing

Network load balancers analyze IP addresses and other network information to determine the best way to route traffic. They track the origin of application traffic and can assign a static IP address to several servers. Network load balancers use the static and dynamic load balancing techniques discussed earlier to balance server load.

Global Server Load Balancing

Global server load balancing takes place across several geographically dispersed servers. Businesses, for example, can have servers in several data centers in various countries and third-party cloud providers worldwide. In this situation, local load balancers control the application load inside a region or zone.

They try to direct clients to the server destination that is geographically closest to them. Only if a server fails will they send traffic to servers outside the client’s geographic zone.
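
A minimal sketch of this behavior is shown below: choose the closest healthy location and fall back to a more distant one only on failure. Straight-line distance is used as a simple stand-in for however a real system measures proximity, and the region names and coordinates are illustrative assumptions.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt


@dataclass
class Region:
    name: str
    lat: float
    lon: float
    healthy: bool = True


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points (haversine formula)."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))


def pick_region(client_lat, client_lon, regions):
    """Return the closest healthy region to the client."""
    candidates = [r for r in regions if r.healthy]
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=lambda r: distance_km(client_lat, client_lon, r.lat, r.lon))


regions = [
    Region("europe", 53.3, -6.3),          # approximate coordinates
    Region("north-america", 38.9, -77.0),
    Region("asia", 19.1, 72.9),
]

madrid = (40.4, -3.7)
print(pick_region(*madrid, regions).name)  # europe

regions[0].healthy = False                 # simulate a failure in the nearest region
print(pick_region(*madrid, regions).name)  # north-america
```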

DNS Load Balancing

In DNS load balancing, you configure your domain to route network requests across a pool of resources under that internet-accessible domain (it could be a print server, mail system, website, etc.). DNS load balancing helps maintain application availability and network traffic balance across a globally distributed resource pool.

Which One Is Right for You?

When selecting a load-balancing solution, consider the following:

- Immediate and future needs – Getting a load balancer is often a result of traffic demands overwhelming your infrastructure. However, the choice should consider more than just equal distribution of traffic across servers. It should also cover network distribution, the level of failover allowed, whether you need encryption, and the security requirements that accompany building a server cluster.

- Anticipated traffic levels – It is easy to overspend on capacity or to underestimate your needs, and either mistake creates a predicament. Start by calculating anticipated traffic levels to inform your purchase.

- High availability (HA) – Even though a load-balancing solution can optimize server usage and maximize traffic flow, business continuity must be considered when choosing a provider.

- Total Cost of Ownership (TCO) – Budget for both the initial cost of setting everything up and ongoing expenses like upgrades and contracts.

- Security – Load balancing helps the network handle more traffic, but more traffic also gives attackers more points to target. That makes security considerations integral to a business growth strategy.
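
For the anticipated traffic levels point above, a rough sizing calculation might look like the following. The function name, the 30% headroom, the single spare server, and the traffic figures are all illustrative assumptions, not recommendations.

```python
from math import ceil


def servers_needed(peak_rps, per_server_rps, headroom=0.3, spares=1):
    """Estimate the server count for a given peak request rate.

    headroom: fraction of each server's capacity kept free for bursts.
    spares:   extra servers so the pool survives a failure.
    """
    usable_rps = per_server_rps * (1 - headroom)
    return ceil(peak_rps / usable_rps) + spares


# 4,500 requests/s at peak, 500 requests/s per server at full load
print(servers_needed(peak_rps=4500, per_server_rps=500))  # 14
```

Here each server is planned at 350 requests per second, so 13 servers cover the peak and one more is added as a spare.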

Final Thought: What About Containers?

Containerized workloads and apps need container load balancing to provide traffic management services. Developers now use containers to quickly test, distribute, and scale applications using continuous integration and continuous delivery (CI/CD).

However, because container-based applications are transient and stateless, they need specialized traffic control to perform at their best. Container orchestration technologies such as Kubernetes launch and manage containers. A load balancer placed in front of the Docker engine improves client request scalability and availability.

This helps the microservices-based apps running within the containers continue to work as expected. Load balancing Docker containers makes it possible to upgrade a single microservice without causing downtime. When containers are distributed across server clusters, load balancers deployed in Docker containers allow numerous containers to be reached through the same host port.
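
The zero-downtime upgrade idea can be sketched as a rolling update: each container is taken out of rotation, replaced, and returned while the others keep serving. This is a toy model that does not call Docker or Kubernetes; `ContainerPool`, `rolling_upgrade`, and the container names are hypothetical.

```python
class ContainerPool:
    """Round-robin pool of containers behind one load-balanced endpoint."""

    def __init__(self, containers, version="v1"):
        self.in_rotation = list(containers)
        self.versions = {name: version for name in containers}
        self._counter = 0

    def route(self):
        """Return the next container in rotation for an incoming request."""
        if not self.in_rotation:
            raise RuntimeError("no containers in rotation")
        target = self.in_rotation[self._counter % len(self.in_rotation)]
        self._counter += 1
        return target


def rolling_upgrade(pool, new_version):
    """Upgrade one container at a time while the rest keep serving traffic."""
    served_during_upgrade = []
    for name in list(pool.versions):
        pool.in_rotation.remove(name)               # take the container out of rotation
        served_during_upgrade.append(pool.route())  # a request arrives mid-upgrade
        pool.versions[name] = new_version           # stand-in for replacing the container
        pool.in_rotation.append(name)               # return it to rotation
    return served_during_upgrade


pool = ContainerPool(["c1", "c2", "c3"])
served = rolling_upgrade(pool, "v2")

print(pool.versions)  # {'c1': 'v2', 'c2': 'v2', 'c3': 'v2'}
print(len(served))    # 3 requests were served while upgrades were in progress
```

The pool needs at least two containers for this to work, since one is always out of rotation during its own upgrade.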